A detailed look at running Kamal setup/deploy

Now that we have our Sinatra app up and running, I want to do a quick run-through of the output to show how simple Kamal is and how it’s really just a nice layer on top of some great open-source tools like Docker and Traefik.

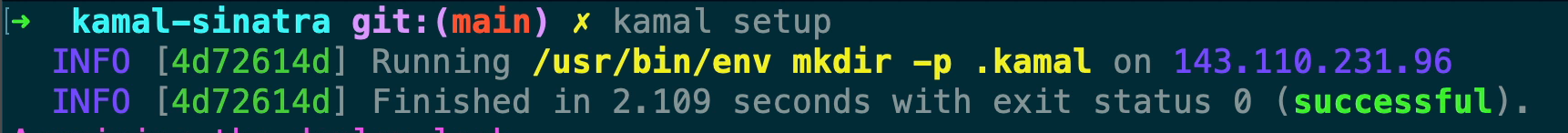

Every Kamal command prints its output in a common format:

[LOG LEVEL] [COMMAND REFERENCE] [OUTPUT] on [server or localhost]

The COMMAND REFERENCE (or SHA) portion of the output is helpful when you need to follow one specific command through to its output. You won’t need it often, but it’s worth knowing about. Also, Kamal runs some commands on your local machine and others on your server. If you’re using a remote builder, for instance, Kamal calls docker build locally with a buildx remote context that points to your server.
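To make the command reference idea concrete, here’s a small Ruby sketch that groups log lines by their reference so you can follow one command from start to finish. The regex assumes lines shaped like the format above (level, reference in square brackets, then the output); the sample lines and the 192.0.2.10 address are made up for illustration, and any line that doesn’t match, such as raw build output, is simply skipped.

```ruby
# Matches lines shaped like: [LOG LEVEL] [COMMAND REFERENCE] [OUTPUT] on [server or localhost]
# (an assumption based on the format described above)
LOG_LINE = /\A\s*(?<level>[A-Z]+)\s+\[(?<ref>\h+)\]\s+(?<output>.*)\z/

# Group log lines by command reference so a single command can be followed.
def group_by_reference(lines)
  lines.each_with_object({}) do |line, groups|
    match = LOG_LINE.match(line.chomp)
    next unless match # skip lines that don't follow the format, such as raw build output

    (groups[match[:ref]] ||= []) << "#{match[:level]}: #{match[:output]}"
  end
end

# Hypothetical sample output (192.0.2.10 is a documentation address)
log = [
  "  INFO [1a2b3c4d] Running docker -v on 192.0.2.10",
  "  INFO [5e6f7a8b] Running /usr/bin/env mkdir -p .kamal on 192.0.2.10",
  "  INFO [1a2b3c4d] Finished in 0.05 seconds with exit status 127 (failed).",
]

p group_by_reference(log)["1a2b3c4d"]
# => ["INFO: Running docker -v on 192.0.2.10", "INFO: Finished in 0.05 seconds with exit status 127 (failed)."]
```

In practice you’d pipe the saved output of a kamal command through something like this, or just search for the reference in your terminal.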

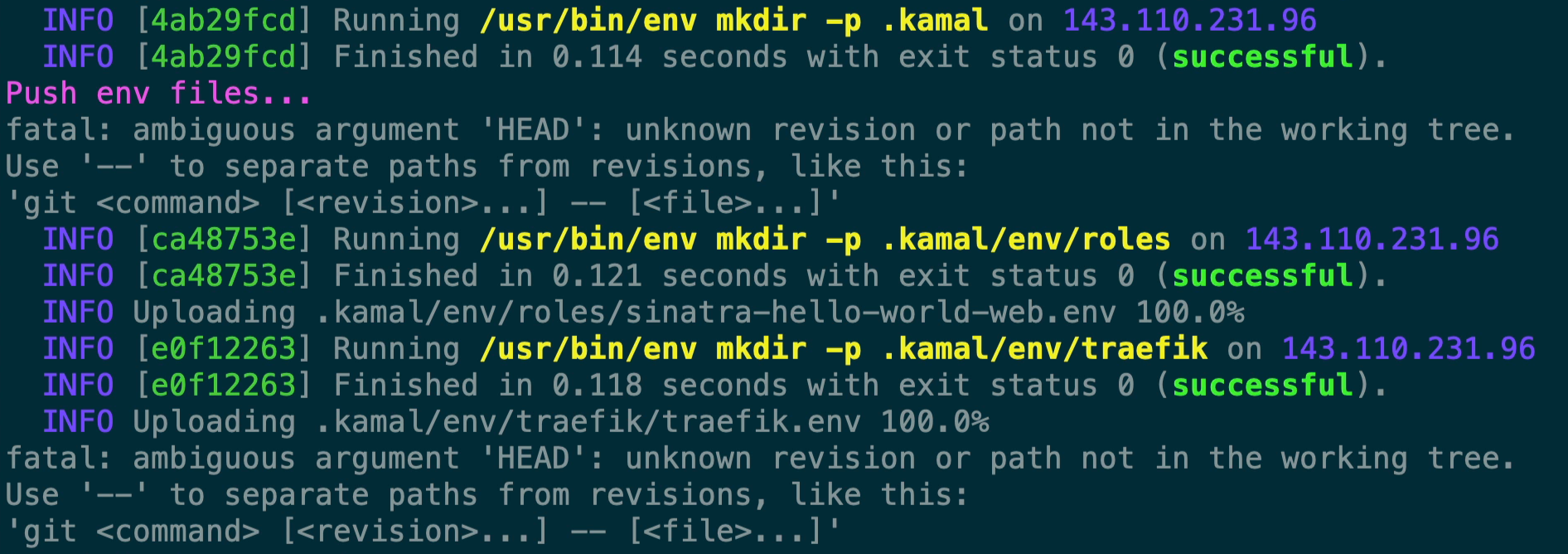

The first command Kamal issues after we run kamal setup creates the directory on your server where the Kamal configuration lives, which by default is ~/.kamal. We’ll come back to this.

At this point, since we’re running setup, Kamal acquires a lock for the run. It does this by placing a small lock file on the server within that .kamal configuration directory, and at the end of the setup run it removes the lock file from the server. If you take a look at the lock file, you’ll see that it’s a base64-encoded string containing the current lock details.

```
$ cat .kamal/lock-apps/details
TG9ja2VkIGJ5OiBOaWNrIEhhbW1vbmQgYXQgMjAyNC0wMS0yM1QyMjozOTo0
NloKVmVyc2lvbjogZGVmZDA4YWExMThjMWNkOGMzNzJhN2YxYjlhYjJmNjYw
ODlmNWIxN191bmNvbW1pdHRlZF8xMTM4MDVhNTI5NzY5NGZhCk1lc3NhZ2U6
IEF1dG9tYXRpYyBkZXBsb3kgbG9jaw==
```

If someone else attempts to deploy at the same time, or runs anything that makes changes on the server while a deploy or setup command is in progress, Kamal prints these details out with something like this:

```ruby
# locked_by, version and message are filled in by Kamal at runtime
<<~DETAILS.strip
  Locked by: #{locked_by} at #{Time.now.utc.iso8601}
  Version: #{version}
  Message: #{message}
DETAILS
```
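Since the lock is just base64 text in a file, the whole lifecycle is easy to sketch. The Ruby below decodes the lock file shown above into the template’s three fields, then mimics acquiring and releasing a lock locally. It assumes the lock is a directory holding a details file (which is what the .kamal/lock-apps/details path suggests) and relies on mkdir being atomic, so a second deploy fails to create the directory. The helper names are hypothetical and this is not Kamal’s own code; it runs against a temp directory rather than a server.

```ruby
require "fileutils"
require "time"   # Time#iso8601
require "tmpdir" # Dir.mktmpdir for the usage example

# Hypothetical helpers that mimic the lock behaviour described above; not Kamal's code.
def lock_details(locked_by, version, message)
  <<~DETAILS.strip
    Locked by: #{locked_by} at #{Time.now.utc.iso8601}
    Version: #{version}
    Message: #{message}
  DETAILS
end

# Decode base64 lock details into a hash. unpack1("m") is core Ruby's decoder
# (Base64.decode64 wraps it) and it skips the line breaks in wrapped output.
def decode_lock_details(encoded)
  decoded = encoded.unpack1("m").force_encoding(Encoding::UTF_8)
  decoded.lines(chomp: true).to_h { |line| line.split(": ", 2) } # split on the first ": " only
end

def acquire_lock(lock_dir, details)
  Dir.mkdir(lock_dir) # atomic: raises Errno::EEXIST if the lock is already held
  File.write(File.join(lock_dir, "details"), [details].pack("m")) # base64, wrapped at 60 chars
  true
rescue Errno::EEXIST
  warn "Deploy lock already held: #{decode_lock_details(File.read(File.join(lock_dir, "details")))}"
  false
end

def release_lock(lock_dir)
  FileUtils.rm_rf(lock_dir)
end

# The lock file from above decodes to the three fields in the template
sample = "TG9ja2VkIGJ5OiBOaWNrIEhhbW1vbmQgYXQgMjAyNC0wMS0yM1QyMjozOTo0\n" \
         "NloKVmVyc2lvbjogZGVmZDA4YWExMThjMWNkOGMzNzJhN2YxYjlhYjJmNjYw\n" \
         "ODlmNWIxN191bmNvbW1pdHRlZF8xMTM4MDVhNTI5NzY5NGZhCk1lc3NhZ2U6\n" \
         "IEF1dG9tYXRpYyBkZXBsb3kgbG9jaw==\n"
p decode_lock_details(sample)["Locked by"] # => "Nick Hammond at 2024-01-23T22:39:46Z"

Dir.mktmpdir do |dir|
  lock_dir = File.join(dir, "lock-apps")
  p acquire_lock(lock_dir, lock_details("Nick Hammond", "defd08a", "Automatic deploy lock")) # => true
  p acquire_lock(lock_dir, lock_details("Someone else", "1234567", "Manual deploy"))         # => false
  release_lock(lock_dir)
end
```

Note that [details].pack("m") wraps its output at 60 characters, which matches the line lengths in the cat output above.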

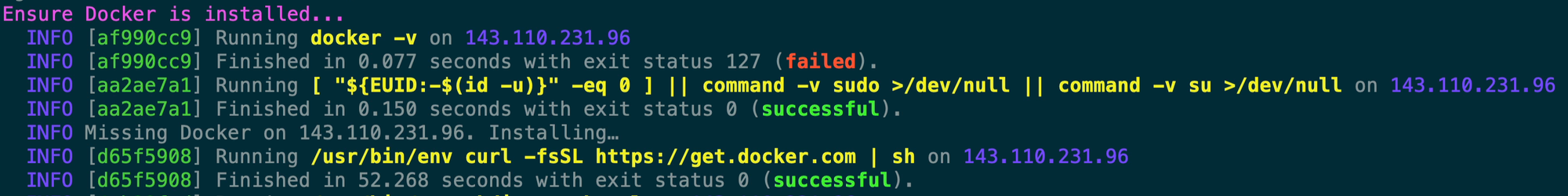

Next, Kamal runs docker -v to check whether Docker is installed. Kamal doesn’t require a specific version of Docker, but something fairly recent is helpful. You’ll notice that the docker -v command fails, which means Kamal can’t find Docker, so it starts the Docker install process. As you can see, it’s the typical curl command pointed at get.docker.com that you’d use to install Docker on a Linux server.
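The “check, then install” logic is simple enough to sketch in a few lines of Ruby. This version runs docker -v locally and only returns the commands it would run rather than executing the installer; in the real run these commands go over SSH to your server. The install string is the commonly documented get.docker.com one-liner, and Kamal’s exact flags may differ.

```ruby
# Hypothetical sketch of "check for Docker, install it if missing".
# It only returns the commands to run; it never executes the installer.
INSTALL_DOCKER = "curl -fsSL https://get.docker.com | sh" # the common get.docker.com one-liner

def docker_installed?
  # Kernel#system returns nil if the binary can't be found and false on a non-zero exit,
  # so both "not installed" cases collapse to false here.
  system("docker", "-v", out: File::NULL, err: File::NULL) ? true : false
end

def bootstrap_commands(installed: docker_installed?)
  installed ? [] : [INSTALL_DOCKER]
end

p bootstrap_commands(installed: false) # => ["curl -fsSL https://get.docker.com | sh"]
```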

Next, you’ll see Kamal pushing up env files for our web service and for Traefik to .kamal/env. The file name is built from our service name and the server role (web), so #{service}-#{role}.env becomes sinatra-hello-world-web.env. A nice feature of Kamal is that it only pushes the required variables to the env file on the server; it isn’t blanket-pushing your local .env file up to your server. For instance, since you most likely won’t ever add KAMAL_REGISTRY_PASSWORD to the env section of your deploy.yml, it’ll never end up in these env files. Kamal also only pushes the variables required for each specific role, so your web role only gets the env variables you’ve mapped within the env configuration in deploy.yml, and that’s it.

Ignore the fatal lines in here; they’re related to git. Since this was for a blog post, I hadn’t initialized the git repository yet.
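Here’s a rough Ruby sketch of that filtering: only variables mapped in the env configuration end up in the role’s file, and anything else in your local environment (like KAMAL_REGISTRY_PASSWORD) is left behind. It assumes a deploy.yml env section with clear values and a list of secret names, plus an optional role-level env of the same shape; RACK_ENV, SESSION_SECRET and PORT are hypothetical variables, and Kamal’s real file layout and quoting may differ.

```ruby
# Hypothetical sketch of building a role's env file from a deploy.yml-style env config.
# Only variables mapped under "clear" or "secret" end up in the file.
def env_file_name(service, role)
  "#{service}-#{role}.env"
end

def role_env_file(env_config, role_env_config, local_env)
  clear   = env_config.fetch("clear", {}).merge(role_env_config.fetch("clear", {}))
  secrets = env_config.fetch("secret", []) | role_env_config.fetch("secret", [])

  lines  = clear.map { |key, value| "#{key}=#{value}" }
  lines += secrets.map { |key| "#{key}=#{local_env.fetch(key)}" } # raises KeyError if a secret is missing locally
  lines.join("\n") + "\n"
end

local_env = {
  "KAMAL_REGISTRY_PASSWORD" => "not-a-real-token", # used for registry login, never mapped under env
  "SESSION_SECRET" => "s3cr3t"
}
env_config = { "clear" => { "RACK_ENV" => "production" }, "secret" => ["SESSION_SECRET"] }

puts env_file_name("sinatra-hello-world", "web") # prints sinatra-hello-world-web.env
puts role_env_file(env_config, {}, local_env)
# RACK_ENV=production
# SESSION_SECRET=s3cr3t
```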

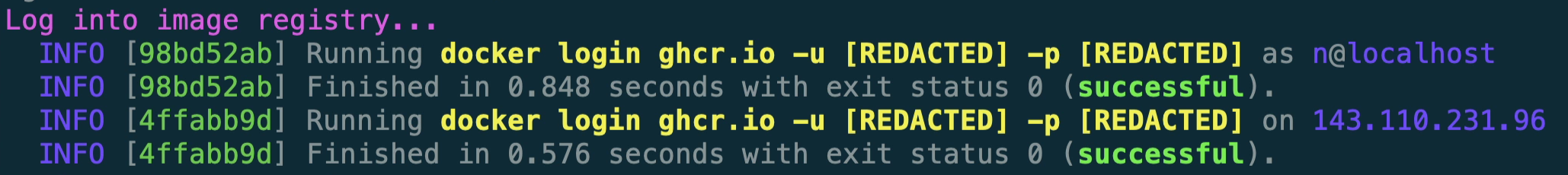

Next, Kamal uses the registry configuration in our deploy.yml to check that our credentials are valid and that we can log in to our registry.

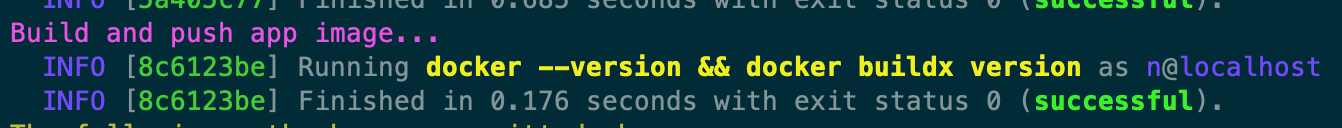

After that, since we’re running kamal setup instead of kamal deploy, Kamal checks whether buildx is available. buildx uses Docker’s BuildKit to extend Docker’s basic build functionality.

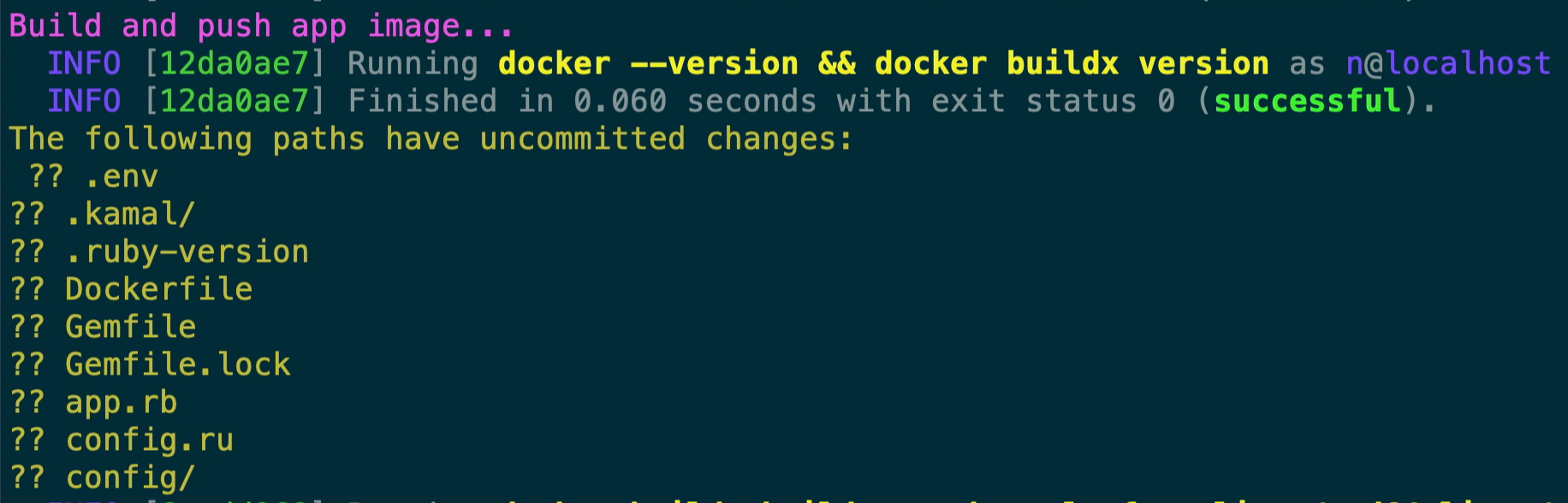

Next, you can see Kamal highlighting that we have a dirty git tree.

This is important because Kamal builds the Docker image from the current state of the files wherever it’s running, which is your machine. So if you’ve changed one of your application files, that change gets built into the image you’re deploying. This is great when you’re first getting Kamal going, since you can iterate quickly and get your application deployed without committing and pushing your changes each time. Later on, though, once your application is fully hosted, you can prevent a deployment from going through by using Kamal’s hooks; the sample pre-build hook that kamal init creates shows how to do that.
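The dirty tree also shows up in the version Kamal uses: the lock details earlier contain a version of the form sha_uncommitted_ followed by random hex, and the build tag later in this post has the same shape. The Ruby sketch below reproduces that shape from git status --porcelain output. The helper names are hypothetical, and the 16-hex-character suffix is inferred from the tags in this post rather than taken from Kamal’s source; a pre-build hook could use the same dirty check to refuse a deploy.

```ruby
require "securerandom"

# Hypothetical helpers that reproduce the version shapes seen in this post.
def dirty_tree?(porcelain_output)
  # `git status --porcelain` prints one line per modified or untracked file, nothing when clean
  porcelain_output.each_line.any? { |line| !line.strip.empty? }
end

def deploy_version(sha, dirty:)
  # 8 random bytes -> 16 hex characters, matching suffixes like 6095f6fe512b4be9
  dirty ? "#{sha}_uncommitted_#{SecureRandom.hex(8)}" : sha
end

status = " M app.rb\n?? views/new.erb\n" # sample `git status --porcelain` output
puts deploy_version("defd08aa118c1cd8c372a7f1b9ab2f66089f5b17", dirty: dirty_tree?(status))
```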

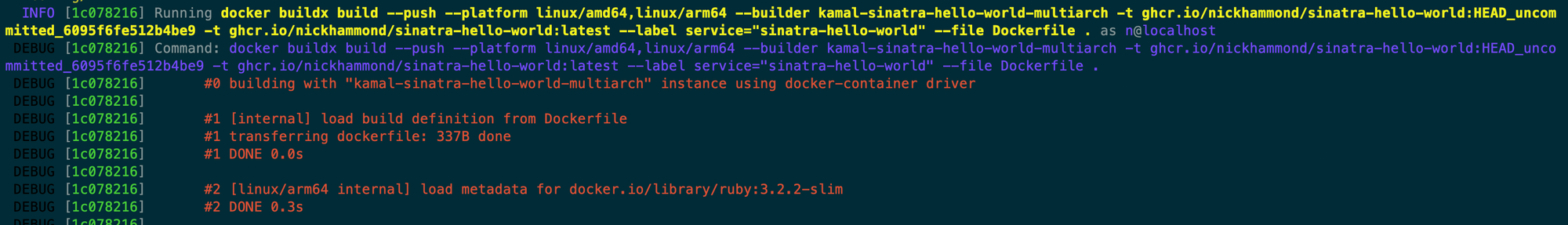

After that, Kamal builds our image, passing in some of the details we’ve provided in deploy.yml along with a few other things.

Here’s a quick overview of the arguments for the build step.

- `--push`: Kamal uses the push flag so that building and pushing happen in one step instead of two (build, then push).
- `--platform linux/amd64,linux/arm64`: by default Kamal builds for multiple architectures, usually AMD64 and ARM64 (Apple silicon). I find it easier and faster to build only for the architecture you deploy to, and to do it on the remote builder, which is faster all around. Having both architectures here also means Docker builds your image twice; if you look at the output, you’ll see, for instance, that it runs `bundle install` twice.
- `--builder kamal-sinatra-hello-world-multiarch`: Kamal configures a builder for the buildx build from the builder configuration in deploy.yml. You can customize a few builder settings, such as single or multi-arch, local or remote, and the Dockerfile location. Kamal creates this builder for you automatically, and when you’re first getting things set up you’ll sometimes need to reset your build configuration, which you can do with `kamal build remove`.
- `-t ghcr.io/nickhammond/sinatra-hello-world:HEAD_uncommitted_6095f6fe512b4be9`: Kamal tags our image with our registry location and a SHA. Normally this is a plain SHA, but since we have a dirty tree you’ll see `uncommitted` in there followed by a random SHA. This is another reason you’ll eventually want to prevent dirty deploys from going out, so that your tags map cleanly to your repository’s SHAs.
- `-t ghcr.io/nickhammond/sinatra-hello-world:latest`: just a default `latest` tag.
- `--label service="sinatra-hello-world"`: Kamal can deploy multiple services to the same host, so everything is named and tied back to the service you’re deploying.
- `--file Dockerfile .`: Kamal builds from the default `Dockerfile`, but you can point it at, for instance, a `Dockerfile.prod` with the `dockerfile` option in your builder configuration.

The rest of the red output is your docker build output. I’m not going to run through that because it’s dependent on what’s in your Dockerfile.
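To tie those arguments together, here’s a small Ruby sketch that assembles the same buildx invocation from a handful of settings. The method and keyword names are hypothetical stand-ins for the deploy.yml settings discussed above, not Kamal’s internals. Returning an argument array means you could pass it straight to system(*args) without worrying about shell quoting, which is why the service label has no quotes here.

```ruby
# Hypothetical: assemble the buildx invocation described above from a few settings.
def build_args(image:, version:, service:, builder:, platforms:, dockerfile: "Dockerfile")
  [
    "docker", "buildx", "build",
    "--push",                          # build and push in one step
    "--platform", platforms.join(","), # a single platform here means a single-arch build
    "--builder", builder,
    "-t", "#{image}:#{version}",
    "-t", "#{image}:latest",
    "--label", "service=#{service}",   # ties the image back to the service
    "--file", dockerfile,
    "."                                # build context
  ]
end

args = build_args(
  image: "ghcr.io/nickhammond/sinatra-hello-world",
  version: "HEAD_uncommitted_6095f6fe512b4be9",
  service: "sinatra-hello-world",
  builder: "kamal-sinatra-hello-world-multiarch",
  platforms: %w[linux/amd64 linux/arm64]
)
puts args.join(" ")
```

Passing a single platform, such as linux/amd64, is the sketch’s equivalent of the single-arch build I recommend above.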

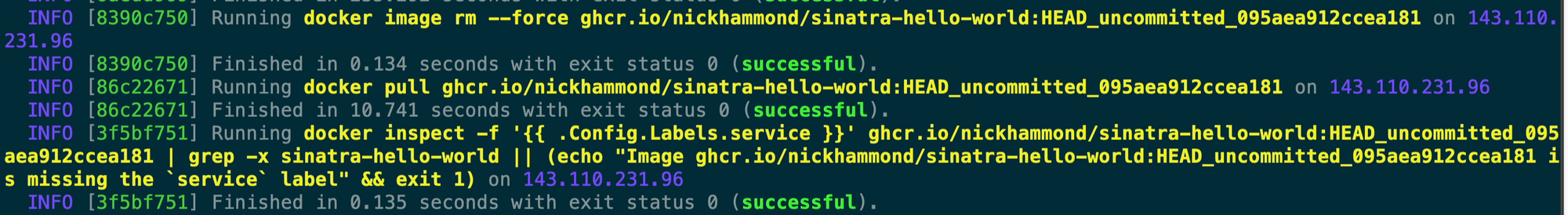

This next step ensures that our Docker image has a service label matching the service we’ve set in deploy.yml. It’s a simple validation step to confirm the rest of the image configuration is correct. I believe this also verifies, if you passed the --skip-push option, that the image Kamal pulled down is indeed for the same service.
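A check like this boils down to reading one label and comparing it. The Ruby sketch below uses docker inspect with a Go template to read the service label and raises when it doesn’t match; the helper names and error message are hypothetical, and this isn’t necessarily the exact command Kamal runs. When the label is missing, docker prints "<no value>", which fails the comparison.

```ruby
require "open3"

# Hypothetical sketch of the service label check; not Kamal's implementation.
def image_service_label(image)
  # docker inspect renders a single label with a Go template
  # (Open3 raises Errno::ENOENT if docker isn't installed)
  output, status = Open3.capture2("docker", "inspect", "-f", "{{ .Config.Labels.service }}", image)
  status.success? ? output.strip : nil
end

def ensure_service_label!(label, service)
  return true if label == service

  raise "Image is missing the 'service' label or it doesn't match #{service.inspect} (got #{label.inspect})"
end

# e.g. ensure_service_label!(image_service_label("ghcr.io/nickhammond/sinatra-hello-world:latest"), "sinatra-hello-world")
```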

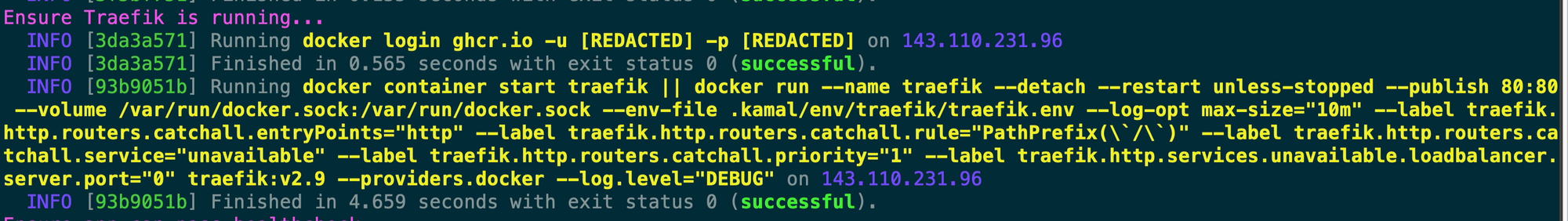

After that, Kamal checks that Traefik is running. Traefik is the web proxy that listens on port 80 and forwards requests to your web service(s). You’ll notice that Kamal passes in the Traefik .env file it pushed earlier in the deployment and sets up a default catch-all route for HTTP requests that responds with an unavailable response. Once Kamal adds your web service, though, there will be a route for it that takes precedence over the default unavailable response.

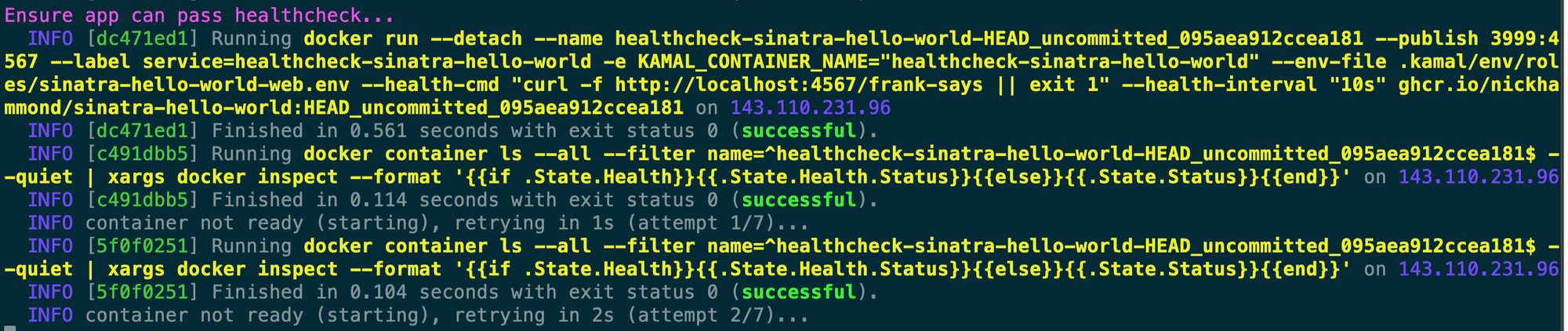

Next, Kamal runs our container with the custom healthcheck we set, along with a few other things. Again, you’ll notice it’s using the .env file it pushed up to our server at the beginning of the run. This step is a little confusing because Kamal runs the healthcheck again a bit later, but I’ll explain why shortly.

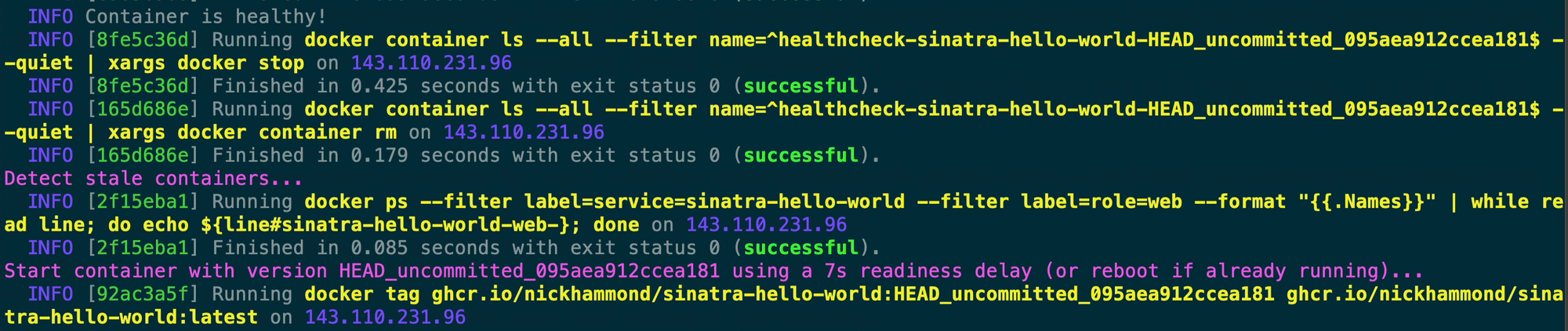

After a few more healthcheck attempts, you’ll see Kamal report that the “Container is healthy!” and then tear down the healthcheck container. What Kamal is doing here is ensuring that your app will actually boot before it starts the rolling deploy process later.

You’ll also notice that, since Kamal has determined our container is good for release, it tags that version of the image with the latest tag.
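The healthcheck step is essentially a polling loop. Here’s a minimal Ruby sketch of one, with the status lookup injected as a lambda so it can be exercised without Docker; in a real setup that lambda might run docker inspect --format '{{.State.Health.Status}}' against the container, which reports starting, healthy or unhealthy. The max_attempts and interval values are arbitrary, not Kamal’s defaults.

```ruby
# Hypothetical polling loop: ask for a container's health status until it reports "healthy".
# status_reader stands in for running something like
#   docker inspect --format '{{.State.Health.Status}}' <container>
# max_attempts and interval are arbitrary values, not Kamal's defaults.
def wait_until_healthy(status_reader, max_attempts: 7, interval: 1)
  max_attempts.times do |attempt|
    status = status_reader.call
    return true if status == "healthy"
    raise "Container is unhealthy" if status == "unhealthy"

    # back off a little more after each attempt, but don't sleep after the last one
    sleep(interval * (attempt + 1)) if attempt < max_attempts - 1
  end
  false
end

statuses = %w[starting starting healthy]
p wait_until_healthy(-> { statuses.shift }, interval: 0) # => true
```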

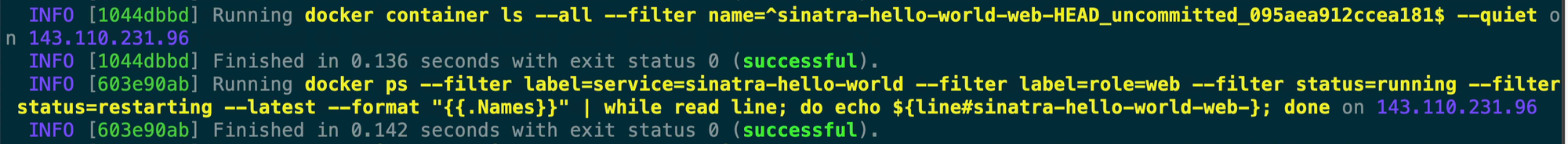

The next few steps fetch the old container ID for our app. Kamal uses it later to figure out which container to send a stop signal to.
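Finding “the currently running container for this service and role” maps nicely onto docker ps filters. The Ruby sketch below builds such a command; --quiet, --filter and --latest are real docker ps flags, but the role label and this exact combination are assumptions for illustration, not necessarily what Kamal runs. You could pass the array to Open3.capture2 and strip the output to get the old container ID.

```ruby
# Hypothetical: a docker ps invocation for "the running container for this service and role".
# The label names assume service/role labels on the container.
def current_container_id_cmd(service, role)
  [
    "docker", "ps",
    "--quiet",                              # print only container IDs
    "--filter", "label=service=#{service}",
    "--filter", "label=role=#{role}",
    "--filter", "status=running",
    "--latest"                              # only the most recently created match
  ]
end

puts current_container_id_cmd("sinatra-hello-world", "web").join(" ")
# docker ps --quiet --filter label=service=sinatra-hello-world --filter label=role=web --filter status=running --latest
```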

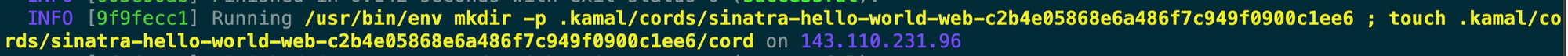

This next step is a simple concept that can seem a bit confusing at first: Kamal uses a ‘cord’ to make a container show up as unhealthy so that Traefik stops sending traffic to it. Think of the cord as a literal, physical cord connected to your container, just a good ol’ RJ45 cable. Kamal ‘ties’ the cord in first and then ‘cuts’ it out when it wants to spin the container down. The cord file is an empty file stored within the .kamal configuration directory.
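Because the cord is just an empty file, tying and cutting it are one-liners. This Ruby sketch shows the idea locally with a temp directory; the file name cord and the cords/sinatra-hello-world-web directory are hypothetical choices for the example. On a real server the cord directory is mounted into the container, so removing the file on the host is what makes the container’s healthcheck start failing.

```ruby
require "fileutils"
require "tmpdir"

# Hypothetical helpers: the cord is just an empty file. While it exists the healthcheck can pass;
# once it's cut, the healthcheck fails and traffic stops being routed to that container.
# The file name "cord" inside the cord directory is an assumption for this sketch.
def cord_path(cord_dir)
  File.join(cord_dir, "cord")
end

def tie_cord(cord_dir)
  FileUtils.mkdir_p(cord_dir)
  FileUtils.touch(cord_path(cord_dir)) # empty file = cord plugged in
end

def cut_cord(cord_dir)
  FileUtils.rm_f(cord_path(cord_dir)) # pull the plug
end

def cord_attached?(cord_dir)
  File.exist?(cord_path(cord_dir))
end

Dir.mktmpdir do |dir|
  cord_dir = File.join(dir, "cords", "sinatra-hello-world-web")
  tie_cord(cord_dir)
  p cord_attached?(cord_dir) # => true
  cut_cord(cord_dir)
  p cord_attached?(cord_dir) # => false
end
```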

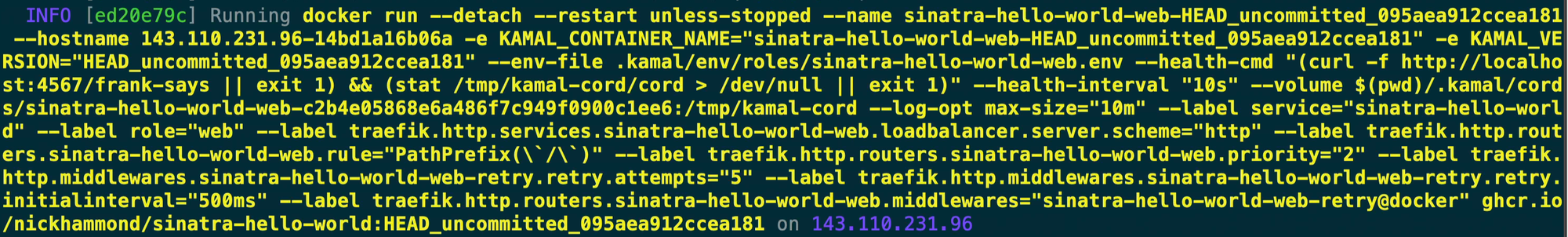

After that, Kamal boots our container. I want to highlight a few things from this docker run since it’s a long one:

- You’ll notice that it’s passing in our env file that was pushed up earlier via `--env-file`.
- Our custom healthcheck command has been rewritten a bit. It’s using the `/frank-says` endpoint that I configured for the Sinatra Kamal demo, but it’s also looking for the cord file with the `stat` command. Kamal mounts our cord file within our container at `/tmp/kamal-cord` by default and combines the healthcheck to check both the endpoint and the cord. This makes it easy to rip the cord out later and get the healthcheck to fail for Traefik and Docker.
- There are various Traefik configuration details passed in as well. You can override all of these, but they basically say: send traffic to this container over HTTP with a path prefix of `/`.
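The combined healthcheck is just two checks chained with &&. Here’s a Ruby sketch that composes a command in that shape: curl -f exits non-zero on HTTP errors, stat fails if the cord file is gone, and Docker treats exit status 0 as healthy and 1 as unhealthy. The cord file path /tmp/kamal-cord/cord is an assumption for this example, and the exact string Kamal generates may differ.

```ruby
require "shellwords"

# Hypothetical: compose a healthcheck that passes only when the HTTP endpoint responds
# successfully AND the cord file exists. The cord path is an assumption for this sketch.
def healthcheck_cmd(url, cord_path: "/tmp/kamal-cord/cord")
  "(curl -f #{Shellwords.escape(url)} || exit 1) && " \
    "(stat #{Shellwords.escape(cord_path)} > /dev/null || exit 1)"
end

puts healthcheck_cmd("http://localhost:4567/frank-says")
# (curl -f http://localhost:4567/frank-says || exit 1) && (stat /tmp/kamal-cord/cord > /dev/null || exit 1)
```

A string like this is what you’d hand to docker run --health-cmd, so Docker runs it inside the container on each health interval.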

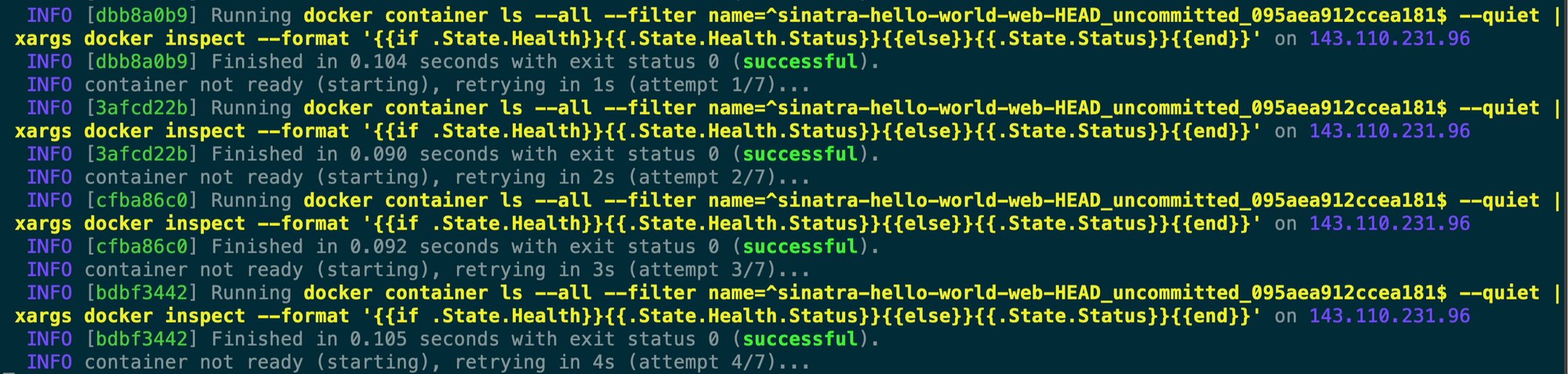

Once that command completes, Kamal starts watching for our container to show up as healthy, which means the curl to http://localhost:4567/frank-says is succeeding and the cord file is present.

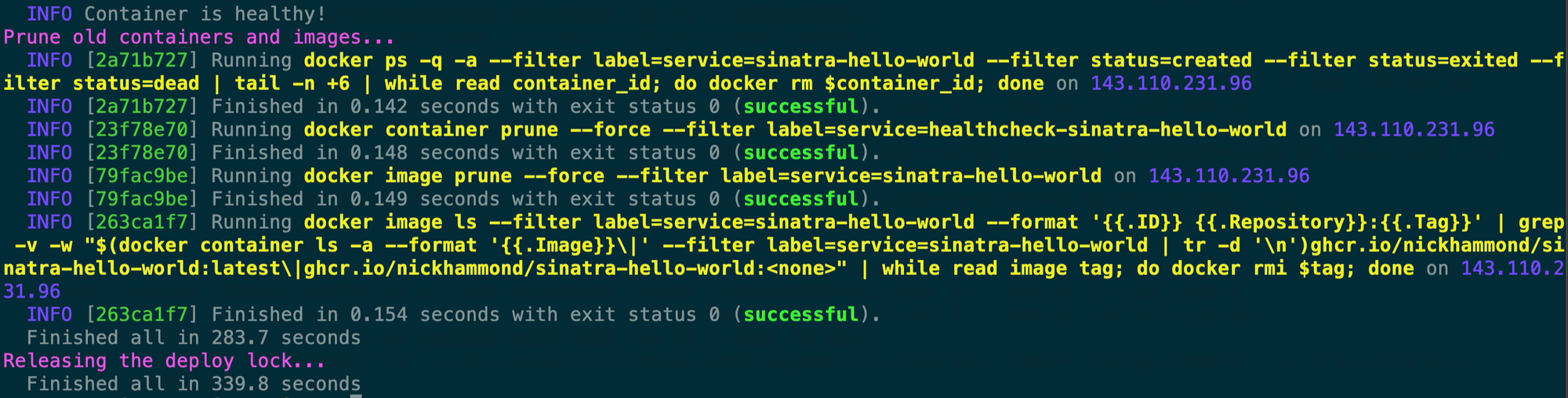

Once our container comes online as healthy, Kamal uses the previous container ID it looked up earlier to clean up, pruning the old containers and images.
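Deciding what to prune is mostly a sorting problem. The Ruby sketch below separates the live version from older containers and keeps the newest few old ones; the Container struct, the keep count of 2 and the version names are all hypothetical, and in practice the container list would come from docker ps filtered by the service label rather than a hand-built array.

```ruby
# Hypothetical: choose which old containers to remove, keeping the newest `keep`
# besides the live one. The retention count is arbitrary, not Kamal's setting.
Container = Struct.new(:id, :version, :created_at)

def containers_to_prune(containers, live_version:, keep: 2)
  old = containers.reject { |c| c.version == live_version }
  old.sort_by(&:created_at).reverse.drop(keep) # newest first, skip the ones we keep
end

now = Time.at(1_700_000_000)
containers = [
  Container.new("a1", "v1", now - 300),
  Container.new("b2", "v2", now - 200),
  Container.new("c3", "v3", now - 100),
  Container.new("d4", "v4", now)
]

p containers_to_prune(containers, live_version: "v4").map(&:id) # => ["a1"]
```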

After it’s done cleaning up our old images, Kamal releases the deploy lock and we’re live.

I wanted to run through this full output because it’s fairly easy to understand. The analogy that Kamal is Capistrano for containers really rings true when you look at all of it. For example, instead of switching the deployed version via a symlink like you do with Capistrano, Kamal just rotates out containers by the application’s SHA and brings the new one online. There’s no magical service resolution process; it’s just building an image and starting a new container in place of the old one.